Google on May 14 unveiled a suite of new artificial intelligence (AI) models along with a series of feature enhancements to its Gemini family of models at the Google I/O 2024 developer conference.

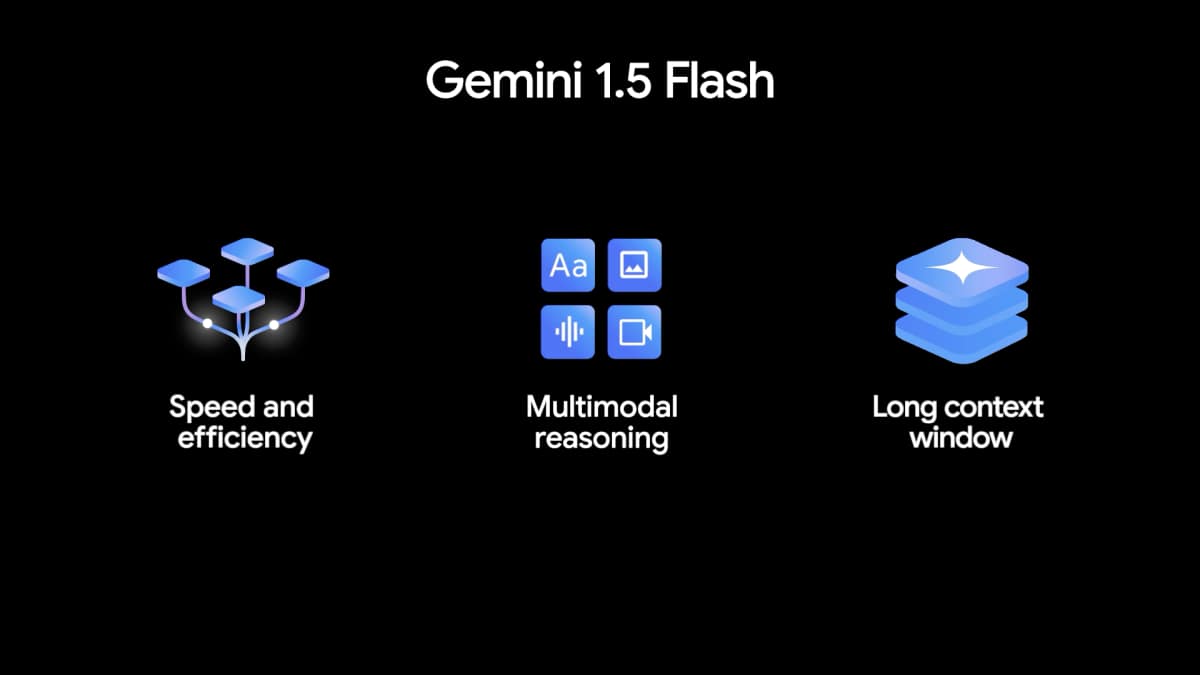

During the conference, Google announced Gemini 1.5 Flash, the newest model in the Gemini family that is designed to be fast and efficient. Google DeepMind CEO Demis Hassabis said the model is optimised for high-volume, high-frequency tasks at scale, where low latency and cost matter most.

These announcements come as the tech giant competes in a fierce battle for dominance with rivals such as OpenAI, Microsoft and Meta in the rapidly growing generative AI space.

On May 13, OpenAI announced a new flagship AI model called GPT-4o that can reason across audio, text and vision in real time and will be available to all free ChatGPT users.

Faster and efficient Gemini model

The latest iteration of the model is more lightweight than Gemini 1.5 Pro, which was introduced in February 2024, but features the same context window of 1 million tokens, which the company claims is the longest context window of any large-scale foundation AI model to date.

Context window size determines how much data (words, images, videos, audio or code) a model can process at once. This essentially means that the bigger a model's context window, the more information it can take in and process in a given prompt.

Gemini 1.5 Flash is more lightweight than Gemini 1.5 Pro but features the same context window of 1 million tokens (Image Source: Google)

Google had earlier said that, with a 1 million token context window, Gemini 1.5 Pro can process about 1 hour of video, 11 hours of audio, codebases with over 30,000 lines of code or over 700,000 words in a single prompt.
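As a rough illustration of what those figures imply, the sketch below derives an average tokens-per-word ratio from the two numbers Google quoted (1 million tokens, about 700,000 words) and uses it to estimate whether a text of a given length would fit in a context window. The ratio is only a back-of-the-envelope approximation, not a tokenizer, and the function names are hypothetical.

```python
# Figures quoted in the article for a 1 million token context window.
CONTEXT_TOKENS = 1_000_000
WORDS_PER_WINDOW = 700_000  # "over 700,000 words", treated here as exactly 700,000

# Rough average implied by those two figures (an approximation, not a tokenizer).
TOKENS_PER_WORD = CONTEXT_TOKENS / WORDS_PER_WINDOW


def estimate_tokens(word_count: int) -> int:
    """Estimate the token count for a text of `word_count` words."""
    return round(word_count * TOKENS_PER_WORD)


def fits_in_window(word_count: int, window_tokens: int = CONTEXT_TOKENS) -> bool:
    """Return True if the estimated token count fits in the window."""
    return estimate_tokens(word_count) <= window_tokens
```

By this estimate, a 1 million word text would not fit in the 1 million token window but would fit in a 2 million token one. Real token counts depend on the language and content of the text, so a model's own token counter should be used for anything that matters.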

Hassabis said that Gemini 1.5 Flash is highly capable of multimodal reasoning across vast amounts of information and excels at summarisation, chat applications, image and video captioning, and data extraction from long documents and tables, among other tasks.

This is because it’s been trained by 1.5 Pro through a process called “distillation,” where the most essential knowledge and skills from a larger model are transferred to a smaller, more efficient model, he said.
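Google did not detail how the distillation was carried out. For readers unfamiliar with the idea, the sketch below shows one common textbook formulation, not Google's actual procedure: the smaller "student" model is penalised according to how far its output probabilities diverge from those of the larger "teacher" model. The function names, the example scores and the temperature value are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by `temperature`."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)  # teacher probabilities
    q = softmax(student_logits, temperature)  # student probabilities
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
```

The loss is zero when the student reproduces the teacher's scores exactly and grows as the two distributions drift apart, so minimising it during training pushes the student to mimic the teacher.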

The Google DeepMind chief also announced a significantly improved version of the Gemini 1.5 Pro AI model, with enhanced code generation, logical reasoning and planning, multi-turn conversation, and audio and image understanding, achieved through data and algorithmic improvements.

Gemini 1.5 Pro can now reason across image and audio for videos uploaded in Google AI Studio as well as follow increasingly complex and nuanced instructions, including ones that specify product-level behavior like role, format and style, he said.

Gemini 1.5 Flash and the new upgraded Gemini 1.5 Pro will be available in public preview with a 1 million token context window on Google AI Studio and Vertex AI starting today.

2 million context window

Alphabet CEO Sundar Pichai said the company is also expanding the context window for both AI models to 2 million tokens for developers in preview, available through a waitlist. "It's the next step towards our ultimate goal of an infinite context window," he said.

Gemini 1.5 Pro will be available to subscribers of Gemini Advanced, the company's paid AI chatbot tier, in more than 150 countries and over 35 languages starting today.

Google first announced Gemini as a family of AI models in December 2023, saying it was expected to fuel the next generation of the company's AI advancements. It was the first AI model from the tech giant after the merger of its AI research units, DeepMind and Google Brain, into a single division called Google DeepMind, led by Hassabis.

In subsequent months, Gemini has become the main brand for all of Google's existing and future AI efforts, similar to what Microsoft is doing with its Copilot brand.

"Gemini is getting us closer to turning any input to any output, so you can think of it like an I/O for a new generation," Pichai said.

The new Gemini Nano and Gemma 2.0

Gemini Nano, the smallest version of the Gemini AI model, can now process images in addition to text. This means that applications using Gemini Nano with multimodality capabilities will be able to understand the world the way people do, through sight, sound and spoken language, starting with Pixel smartphones.

Google also introduced PaliGemma, the company’s first vision-language model in the Gemma family of open-source models. Vision-language models are AI models designed to understand and generate both visual and linguistic content by combining computer vision and natural language processing capabilities.

PaliGemma is inspired by the tech giant’s PaLI-3 vision-language model and is well-suited for tasks such as image captioning, visual question-answering, understanding text in images, object detection and object segmentation, among others.

Google also announced the next generation of its open-source models, Gemma 2, that will feature a new architecture for better performance and efficiency.

PaliGemma will be available to Google Cloud customers in the Vertex AI Model Garden starting May 14, while Gemma 2 will be available soon, the company said.

Apart from this, Google unveiled Veo, a video generation AI model, and Imagen 3, a new version of its text-to-image model.

Hassabis also shared a glimpse of Project Astra, the company's ambitious initiative to build universal AI agents that can help people in everyday life.
