A Class 9 student in Florida, US, died by suicide to be with "Daenerys Targaryen", a lifelike artificial intelligence (AI) chatbot named after a lead character from Game of Thrones. The 14-year-old confessed to being in love with "Dany" and had spent months talking to chatbots on Character.AI, a role-playing app that allows users to create their own AI characters or chat with characters created by others.

Sewell Setzer III, who went by "Daenero" on the AI app, died in February. On the night of February 28, he told Dany that he loved her, and that he would soon come home to her. Sewell then put down his phone, picked up his stepfather’s handgun and shot himself.

His mother filed a lawsuit this week against Character.AI, accusing the company of being responsible for his death, The New York Times reported. She called the company’s technology “dangerous and untested” and said it can “trick customers into handing over their most private thoughts and feelings.”

Sewell knew that Dany wasn’t a real person and that its responses were just the outputs of an AI language model, but he developed an emotional attachment anyway. A message displayed above all their chats also reminded him that “everything Characters say is made up!” Still, he kept engaging in long role-playing dialogues with the bot, frequently sharing updates about his life.

Their chats sometimes got romantic or sexual, The New York Times reported, but other times, Dany just acted like a friend who would listen to him, offer sound advice, and always reply to his messages.

His parents and friends did notice that he was pulling back from the real world, isolating himself and spending long hours on his phone, but they never suspected that he had fallen for an AI chatbot.

When his mother accessed the app after Sewell's death, she saw that he had opened up to the chatbot about having suicidal thoughts.

Here's an excerpt from the conversation published by The New York Times:

Responding to the death, Character.AI said it was "heartbroken" by the loss and that it has been adding new safety features to the app. "We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family. As a company, we take the safety of our users very seriously and we are continuing to add new safety features," the company wrote on X, sharing a link to its blog post on community safety.

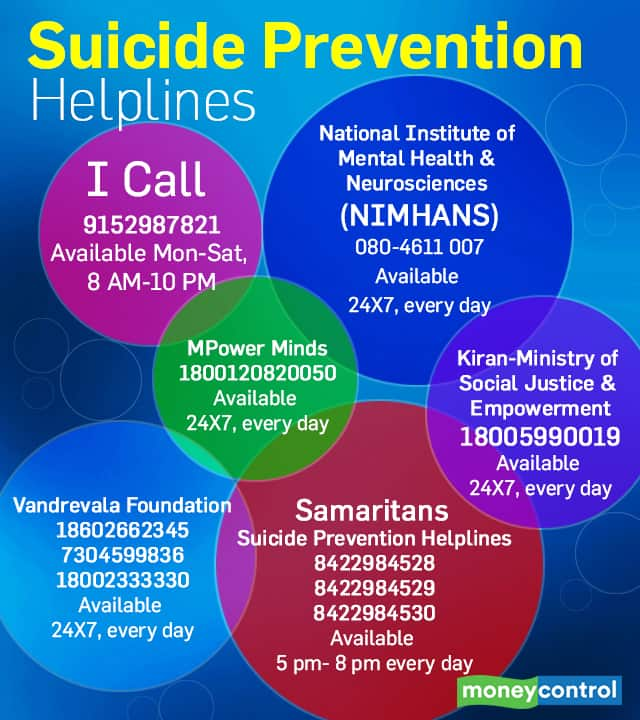

Suicide prevention helpline resources
