The artificial intelligence arms race is in full swing, and companies are not shying away from throwing some punches with their latest product launches. In some instances, the gloves are even off.

Earlier this week, Google unveiled its "most capable" AI model, Gemini, marking a significant move in the ongoing race for AI supremacy against OpenAI's GPT-4 and Meta's Llama 2.

Built from the ground up to be multimodal, Gemini can understand and work with different types of data simultaneously, including text, code, audio, images, and video.

The AI model will be available in three different sizes: Ultra (for highly complex tasks), Pro (for scaling across a wide range of tasks) and Nano (for on-device tasks).

This is the first model from Google DeepMind's stable, following the merger of the search giant's AI research units, DeepMind and Google Brain.

OpenAI's ChatGPT, powered by GPT-3.5, sent shockwaves through the world after its launch late last year and quickly became the talk of the town. While Google was initially caught off guard, it is now showing signs of finally getting its hands dirty and preparing for a fight.

And yes, with the launch of Gemini, Google has made some waves. But is the model as good as the company says it is?

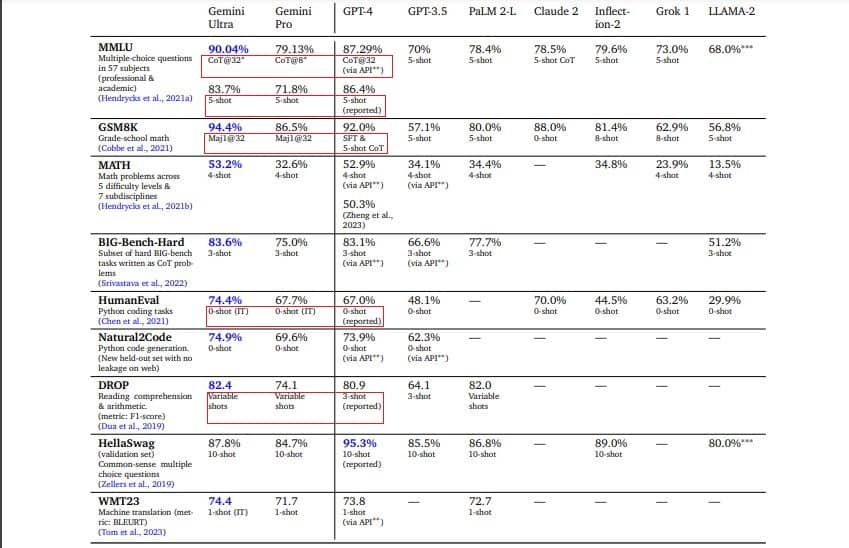

Google DeepMind states that Gemini Ultra surpasses GPT-4 on 30 out of 32 standard performance measures, though the margins are narrow.

While the company has successfully sold the public a 'Jetsons'-style vision of the future, concerns about the accuracy of its claims are now taking centre stage.

The technical report

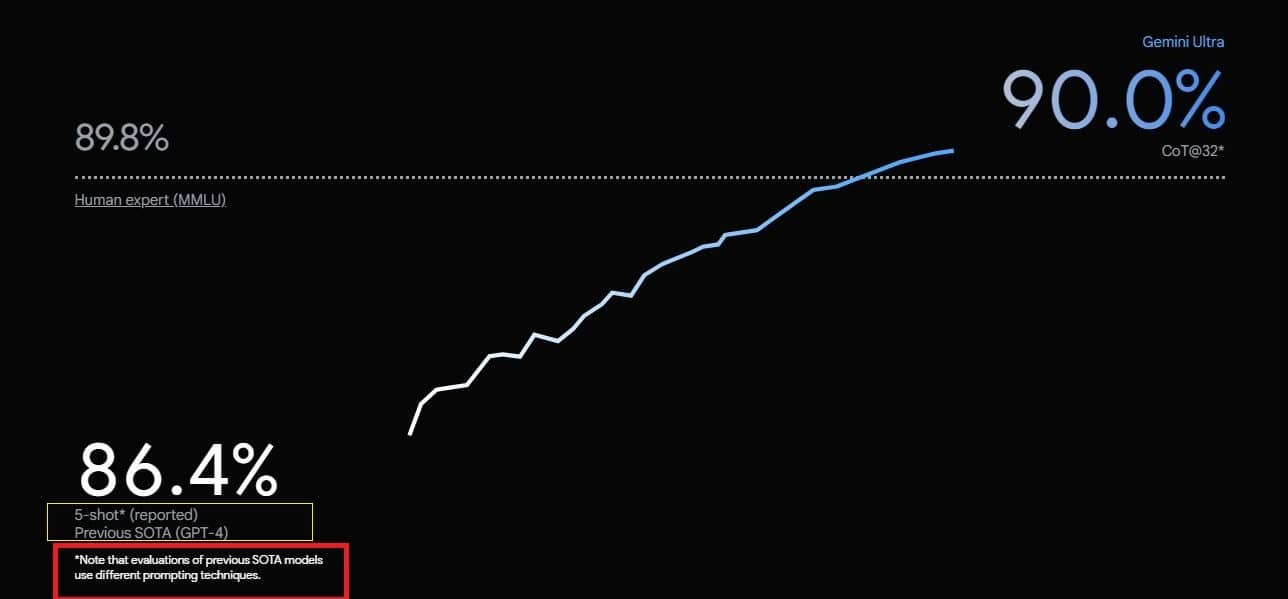

Google ran several benchmark tests comparing Gemini with GPT-4. Gemini Ultra scored an impressive 90 percent on the Massive Multitask Language Understanding (MMLU) test, surpassing human experts (89.8 percent) and outperforming GPT-4 (86.4 percent). MMLU spans 57 subjects, including math, physics, history, law, medicine and ethics, and tests both world knowledge and problem-solving ability.

However, Google used different prompting techniques for the two models. GPT-4's score of 86.4 percent relied on the industry-standard "5-shot" prompting technique, whereas Gemini Ultra's 90 percent result was based on a different method: "chain-of-thought with 32 samples."

It's also worth noting that Google conducted these tests using an outdated version of GPT-4, as indicated in the yellow box in the image above. The note mentions that they used a "previous state-of-the-art" (SOTA) version for GPT-4.

When both models were evaluated with 5-shot prompting on MMLU, GPT-4 scored 86.4 percent against Gemini Ultra's 83.7 percent. On 10-shot HellaSwag, a benchmark for commonsense reasoning, GPT-4 scored 95.3 percent, outperforming both Gemini Ultra (87.8 percent) and Gemini Pro (84.7 percent).

The term "shot" comes from few-shot learning, where a model learns from only a handful of examples per class. In benchmark prompting, however, it refers to the number of solved examples placed in the prompt ahead of the actual question, without any additional training. A 5-shot test, for instance, shows the model five example questions with their answers before asking it a new one.
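To make the idea concrete, here is a minimal Python sketch of how a k-shot prompt might be assembled. The `Question:`/`Answer:` layout and the function name `build_few_shot_prompt` are illustrative assumptions, not the exact template Google or OpenAI used for MMLU, which also lists the multiple-choice options.

```python
from typing import List, Tuple

def build_few_shot_prompt(
    examples: List[Tuple[str, str]], question: str, k: int = 5
) -> str:
    """Place k solved (question, answer) pairs ahead of the new question.

    The model only reads these examples in its prompt; no training happens.
    """
    blocks = [f"Question: {q}\nAnswer: {a}\n" for q, a in examples[:k]]
    blocks.append(f"Question: {question}\nAnswer:")  # the model completes this line
    return "\n".join(blocks)

# Hypothetical 2-shot example.
examples = [("What is 2 + 3?", "5"), ("What is 7 - 4?", "3")]
print(build_few_shot_prompt(examples, "What is 6 + 1?", k=2))
```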

Chain of thought (CoT), meanwhile, refers to the logical sequence of intermediate steps an AI model works through to arrive at a decision or output. Simply put, CoT prompting guides the model to reason step by step before it generates an answer.
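A minimal sketch of CoT prompting, assuming a plain-text prompt and numeric answers: the prompt nudges the model to reason first, and a helper then pulls the final answer out of the reasoning trace. The trigger phrase "Let's think step by step." and the "The answer is ..." convention are common illustrative choices, not the prompts used in Gemini's report, and both function names are hypothetical.

```python
import re
from typing import Optional

# Illustrative trigger phrase; not taken from Google's technical report.
COT_TRIGGER = "Let's think step by step."

def build_cot_prompt(question: str) -> str:
    """Ask for step-by-step reasoning before the final answer."""
    return f"Question: {question}\nAnswer: {COT_TRIGGER}"

def extract_final_answer(trace: str) -> Optional[str]:
    """Return the last number following 'The answer is', assuming numeric answers."""
    matches = re.findall(r"The answer is\s*(-?\d+(?:\.\d+)?)", trace)
    return matches[-1] if matches else None

# A made-up reasoning trace of the kind a model might produce.
trace = "There are 3 boxes with 4 apples each, so 3 * 4 = 12. The answer is 12."
print(extract_final_answer(trace))  # 12
```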

Moreover, Google used different prompting techniques for other benchmarks like GSM8K (Grade School Math 8K) for grade school math reasoning, DROP for reading comprehension and arithmetic, and HumanEval for Python coding tasks.

In its technical report, Google said the Gemini Ultra model achieves its highest accuracy with a chain-of-thought prompting approach that takes model uncertainty into account. The model generates k chain-of-thought samples, such as 8 or 32, and checks whether they agree on an answer. If the consensus exceeds a preset threshold, it selects that answer; otherwise, it falls back to a greedy sample, the maximum-likelihood answer produced without chain of thought.
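The selection rule described in the report can be sketched in Python as below. This is a simplified illustration under stated assumptions: `sample_cot` and `greedy_answer` are hypothetical stand-ins for calls to a model, consensus is measured as the share of all k samples that agree with the most common answer, and the 0.5 default threshold is a placeholder rather than the value Google used.

```python
from collections import Counter
from typing import Callable, Optional

def uncertainty_routed_cot(
    sample_cot: Callable[[], Optional[str]],
    greedy_answer: Callable[[], str],
    k: int = 32,
    threshold: float = 0.5,  # placeholder, not Google's tuned value
) -> str:
    """Majority-vote over k chain-of-thought samples, falling back to greedy.

    sample_cot: hypothetical call returning the final answer of one sampled
        chain of thought (None if no answer could be extracted).
    greedy_answer: hypothetical call returning the maximum-likelihood answer
        decoded without chain of thought.
    """
    answers = [sample_cot() for _ in range(k)]
    valid = [a for a in answers if a is not None]
    if valid:
        top_answer, votes = Counter(valid).most_common(1)[0]
        if votes / k > threshold:  # consensus: share of all k samples agreeing
            return top_answer
    return greedy_answer()  # samples disagree too much: use the greedy answer

# Usage with canned answers standing in for model calls: 7 of 8 samples agree.
canned = iter(["12"] * 7 + ["13"])
print(uncertainty_routed_cot(lambda: next(canned), lambda: "greedy", k=8, threshold=0.6))  # 12
```

With a real model, `sample_cot` would typically run one randomly sampled chain of thought per call and return the extracted answer, while `greedy_answer` would decode once without chain of thought.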

Greedy sampling means picking the single most probable next word, or token, at each step of generating a sequence.
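A minimal sketch of greedy decoding in Python, assuming a toy model that maps the tokens generated so far to a probability for each possible next token. The bigram table, the `<eos>` end marker and the function names are made up for illustration; a real LLM computes these probabilities with a neural network over a vocabulary of many thousands of tokens.

```python
from typing import Callable, Dict, List

def greedy_decode(
    next_token_probs: Callable[[List[str]], Dict[str, float]],
    prompt: List[str],
    max_new_tokens: int = 20,
    eos: str = "<eos>",
) -> List[str]:
    """Repeatedly append the single most probable next token (greedy decoding)."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)  # argmax over candidate tokens
        if best == eos:  # stop once the end-of-sequence marker wins
            break
        tokens.append(best)
    return tokens[len(prompt):]  # only the newly generated tokens

# Hypothetical toy "model": next-token probabilities depend only on the last token.
BIGRAMS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"<eos>": 0.9, "down": 0.1},
}

def toy_model(tokens: List[str]) -> Dict[str, float]:
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})

print(greedy_decode(toy_model, ["the"]))  # ['cat', 'sat']
```

Because it always takes the top choice, greedy decoding is deterministic: the same prompt always produces the same output, unlike randomly sampled chains of thought.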

"What the quack?"

On the day of Gemini's launch, Google also presented a video titled "Hands-on with Gemini: Exploring Multimodal AI Interaction." The video highlighted the capabilities of the multimodal system in handling various inputs, creating a buzz across the internet.

However, the excitement quickly waned when reports revealed discrepancies, indicating that the demo video misrepresented the actual performance of the AI model.

In an opinion piece for Bloomberg, columnist Parmy Olson noted that "In reality, the demo also wasn’t carried out in real time or in voice." She also claimed that Google admitted to editing the video.

"In other words, the voice in the demo was reading out human-made prompts they’d made to Gemini, and showing them still images. That’s quite different from what Google seemed to be suggesting: that a person could have a smooth voice conversation with Gemini as it watched and responded in real time to the world around it," Olson wrote in the op-ed.

To be sure, the description of the video stated that “For the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity.”

In a post on X, Oriol Vinyals, Google DeepMind’s VP of Research and Gemini co-lead, said he was happy to see the interest in the “Hands-on with Gemini” video.

"All the user prompts and outputs in the video are real, shortened for brevity. The video illustrates what the multimodal user experiences built with Gemini could look like. We made it to inspire developers," Vinyals said in the post.
