Over the last 100 years, technology has changed our world. Over the next decade it will transform our reality. We are entering a new technological age in which artificial intelligence and immersive media will transform society at all levels, mediating our lives by altering what we see, hear, and experience. Powered by immersive eyewear and driven by interactive AI agents, this new age of computing has the potential to make our world a magical place where the boundaries between the real and the virtual, the human and the artificial, rapidly fade away. If managed well, this could unleash a new age of abundance. If managed poorly, this technological revolution could easily go astray, deeply compromising our privacy, autonomy, agency, and even our humanity.

In Our Next Reality, two industry veterans provide a data-driven debate on whether the new world we're creating will be a technological utopia or an AI-powered dystopia and give guidance on how to aim for the best future we can. With a foreword by renowned author Neal Stephenson and section contributions from industry thought-leaders such as Peter H. Diamandis, Tom Furness, Phillip Rosedale, Tony Parisi, Avi Bar Zeev and Walter Parkes, this book answers over a dozen of the most pressing questions we face as artificial intelligence and spatial computing accelerates the digitization of our world. Find out why our actions in the next decade could determine the trajectory of our species for countless millennia.

And debate it is. The chapters are arranged in such a manner that the two authors pick sides and debate upon it. It is an extraordinary way of arranging text on the page. A marshalling of evidence for and against the motion. But once the reader recognises what is being done on the page is similar to watching a live debate, then Our Next Reality becomes easily readable. The artificial intelligence domain is so very new even though it has been in existence since the beginning of this century, mostly confined to laboratories and tech specialists. Now, it is public. It is impacting humanity and blurring lines between reality and a parallel artificial reality. Technological advancements are rapidly evolving while spilling over into the areas of human expertise. Now there are questions, valid ones, sometimes tinged with fear, whether AI is going to move from its generative abilities to becoming sentient and replace human workers in skilled jobs. What happens to geopolitical decisions? Will they too be impacted by AI? Will these new developments in the tech sector move towards techno-socialism, shall we remain techno-optimists or does the new reality consist of a new social stratification?

As with any debate, topics are given that enable the debaters to review the arguments from all angles. The chapters too are titled in a similar manner: Is the Metaverse really going to happen?, What is the role of AI in our immersive future?, Will our next reality be centralized or decentralized?, The AI-powered metaverse: how will the ecosystem unfold?, Privacy, identity and security: can society manage?, With AI and immersive media redefine marketing?, How will tech advancement disrupt art, culture and media?, What will be the impact on our health and medicine?, How will our kids learn and develop in an AI-powered future?, Will superintelligence and spatial computing unleash an age of abundance?, What will be the geopolitical impact of a global metaverse?, The human condition: will this future make us happier?, and How can we realize the future we want? . These are questions, whether we articulate them or not, are most certainly buzzing in our heads as we wonder about the impact of AI on us and the future generations of mankind.

The extract taken from the book is taken from the chapter on geopolitical impact with Louis Rosenberg offering his thoughts on the matter. Interestingly enough, he quotes and adapts the three laws of robotics as stated by the eminent science fiction writer and professor of biochemistry, Isaac Asimov. By replacing robots with AI, Louis speculates on the future, but it is hard to know at present.

Our Next Reality is a book that may attempt to answer basic questions that are on everyone’s lips pertaining to AI and yet, it leaves the reader a lot to think about. It is worth dipping into this book.

Alvin Wang Graylin is a respected tech entrepreneur and executive, who has founded four venture backed startups in the area of natural language AI search, mobile social, location-based AR services, and big data AI analytics. He's also a recognized industry leader in the immersive computing space having served as China President and Global VP of Corp Development for HTC, President of the Virtual Reality Venture Capital Alliance, Vice-chair of the Industry of VR Alliance, Distinguished Professor of VR at the BeiHang University and is a board member of the Virtual World Society. He was born in China during the Cultural Revolution, and has spent about half his life in China and half in the U.S.

Dr. Louis Rosenberg is an early pioneer of virtual and augmented reality. His work began over thirty years ago in VR labs at Stanford University, NASA, and Air Force Research Laboratory (AFRL) where he developed the first functional mixed reality system. In 1993 he founded the early VR company Immersion Corporation which he brought public on NASDAQ in 1999. In 2004 he founded Outland Research, an early developer of augmented reality and spatial media technology that was acquired by Google in 2011. He received his PhD from Stanford University, was a tenured professor at California State University, and has been awarded over 300 patents for VR, AR, and AI technologies. He is currently CEO of the artificial intelligence company Unanimous AI, the Chief Scientist of the Responsible Metaverse Alliance, and writes often for major publications about the dangers of emerging technologies.

"For anyone who wants to use AI and XR to help build the future, read this book to help you skillfully navigate a future of unprecedented danger and promise." JASON HINER, Editor in Chief, ZDNet

"Our Next Reality really is a must-read for anyone who wants to prepare for the massive AI and XR driven disruption coming our way." CHARLIE FINK, Author | Adjunct | Forbes Tech Columnist

"Our Next Reality does a fantastic job of giving a balanced and insightful analysis to some of the most pressing questions our society will face in the near future. The material is data driven, digestible, and very actionable." RAY KURZWEIL, Author/Entrepreneur/Futurist

"A wide-reaching exploration of the intersections between AI, VR and AR: it's a mind-opener, and a source of reflection on how transformative and still unknown the future of communication, personal technology and even personal privacy might become." SCOTT STEIN, Editor at Large, CNET

—Jaya Bhattacharji Rose

WHAT WILL BE THE GEOPOLITICAL IMPACT OF A GLOBAL METAVERSE?The best way to keep a prisoner from escaping is to make sure he never knows he’s in prison.—Fyodor DostoyevskyAs we design and build the underlying infrastructure for the global metaverse, it’s critical we keep in mind the long-term vision of it as a public good (e.g. GPS, Wikipedia, internet services), rather than a limited resource for a specific country or company. The metaverse will need to freely flow globally as our telecom system does today. We can access almost any of the 6 billion-plus adults on the planettoday directly via their phones, and in the coming one to two decades, which will be the case via their XR device or virtual incarnation. When that day arrives, we’ll look back and wonder how we ever functioned as a society without it. Until that day comes, there are still many risks we’ll need to be conscious of, which Louis will discuss below.Louis on the danger of AI being used for geopolitical decisionsOur world is a dangerous place. Waves of conflict and unrest have repeatedly consumed large portions of the globe, driven by human groups with opposing views or conflicting objectives. This is a problem as old as humanity, but the stakes have exponentially increased as our capacity for inflicting damage on each other has grown. The question addressed in this chapter is whether the AI-powered metaverse will help solve these problems or make them worse. In the section above, Alvin suggests our immersive future will bring people together, reducing cultural and national differences. He also argues that ASI will solve our geopolitical conflicts for us, offering objective solutions to complex problems. While I agree that the metaverse could connect us across national and cultural barriers, reducing conflict by increasing understanding, I am sceptical that humanity will build an ASI we can trust that will resolve our differences. Let me address the AI issue first, and then come back to why the metaverse, with its capacity to connect cultures face-to-face, is a hopeful step towards a more peaceful future.When it comes to ASI, I believe we will create powerful systems that can outthink us on almost every front. And while I’m confident these systems will help us solve difficult scientific and technical problems, such as curing disease and optimizing energy production, I believe it would be extremely dangerous to give any AI system the power to make critical societal decisions on our behalf. Alvin argues that we need such an AI because humanity has proven itself unable to make good decisions on a global scale. While I agree we face significant challenges when it comes to geopolitical decision-making and conflict resolution, I don’t believe we can entrust this process to a digital superintelligence.OUR NEXT REALITYFROM ASIMOV TO HONEYBEES

The renowned scientist and writer Isaac Asimov explored these ideas in the 1950s in a set of short stories about Multivac, a fictional global computer that is fed all the data in the world and asked to solve our economic, social and political problems. The fictional computer reduces poverty, crime and disease, but also creates new problems such as predicting criminal offences not yet committed, leading to the arrest of innocent people. In the ironically titled story ‘Franchise’ from 1955, Multivac is asked to optimize democracy in America. The computer uses a statistical model of society and selects a single citizen it deems representative of the entire population. It then asks a set of questions to that one man and uses his answers to select the US president. The irony is that Multivac disenfranchises millions of voters, for nobody casts a vote, not even the man who answered the questions. I like this story because it shows how a purely objective AI system could be tasked with optimizing a process but ends up undermining its essence, in this case optimizing the democracy out of democracy.I also like this story because my focus for the last decade has been using AI to help large groups make better decisions, not by replacing us but by connecting networked groups into intelligent systems. As described in Chapter 10, my work was inspired by the evolution of biological organisms that amplify their collective intelligence. For me, the most inspirational example in nature is the decision-making process used by honeybee colonies when they outgrow their current dwelling and need to select a new home. That new home could be a hollow log, a deep cavity in the ground, or a crawlspace under your patio. This is a life-or-death decision that will impact their survival for generations, and as a decision-making method that has evolved over hundreds of millions of years, it is remarkable.To find the best solution, the colony sends out hundreds of ‘scout bees’ that search a 30-square-mile area and find dozens of possible homes. For many people, that’s surprising considering each bee has a brain smaller than a grain of sand, but honestly that’s the easy part. The hard part is that the colony then needs to select among the many options and choose the very best site. And no, the queen does not decide – there is no leader, no decision-making hierarchy, no managers or political representatives, and certainly no AI to make this critical decision for them. And this is not a simple problem – the bees need to pick a home that’s large enough to store honey for the winter, insulated sufficiently to stay warm at night, well enough ventilated to stay cool in the summer, while also being safe from predators, protected from the rain, and located near good sources of clean water and, of course, pollen.This is a complex multivariable problem with many competing constraints. And, remarkably, the colony converges on optimal solutions. Biologists have shown that honeybees pick the very best site most of the time. A human business team that needs to select the ideal location for a new factory could face a similarly complex problem and find it difficult to solve. And yet, simple honey bees (each with less than a million neurons) solve this by forming a swarm intelligence that efficiently combines their opinions, enabling the group to converge on solutions they best agree upon, and it’s usually the optimal solution. The phrase ‘hive mind’ gets a bad rap in science fiction, but that’s unfair. A hive mind is simply nature’s way of combining a group’s diverse perspectives to maximize their collective wisdom. I’ll come back to hive minds and their potential for solving global problems, but first – more on traditional AI.Let’s assume we can produce a digital ASI with the capacity to solve complex geopolitical problems and resolve intractable conflicts. In some scenarios, it will be a purely statistical machine that finds solutions based on mathematical assessments (which is how most AI systems work today). This will be similar to the chess playing systems that outmatch our grandmasters. In other scenarios, AI researchers make significant advancements, producing sentient machines that have a ‘sense of self ’ and the elusive quality we call ‘consciousness’. In this case, the decisions reached may not be purely objective but could be influenced by the AI’s own goals, interests and aspirations. Two questions immediately arise. First, could we trust a statistical superintelligence to make critical decisions for humanity? And second, looking further into the future, could we trust a sentient superintelligence to take on this role?Let me address sentience first, because to me it’s the easier answer. I believe it would be extremely dangerous to delegate geopolitical negotiations to an ASI system if the outcome could be influenced by the machine’s interests. Still, many researchers are surprisingly optimistic, insisting we will be able to install protections into these systems that ensure they safely act on our behalf. Building in protections of this nature also goes back to the fictional writing of Isaac Asimov and his famous Three Laws of Robotics composed in 1942. If we replace the word ‘robot’ with AI, these three laws could be written as follows:1. An AI may not injure a human or, through inaction, allow a human to come to harm.2. An AI must obey orders given to it by a human except where such orders would conflict with the First Law.3. An AI must protect its own existence as long as such protection does not conflict with the First or Second Law.In 1985, Asimov added a ‘zeroth’ law, which could be rephrased as: An AI may not harm humanity, or, by inaction, allow humanity to come to harm. Combined, these laws are well formulated, but it’s currently unknown if we can build in such protections. We also don’t know that such protections, if instilled, would last over time. Still, developing a set of rules governing AI systems is an important effort that should be aggressively pursued. The company Anthropic is a leader in this space, building a set of rules they refer to as Constitutional AI. I sincerely hope they and others are successful, but we can’t be sure such protections will pan out.Other researchers believe we won’t need rules because the ASI will possess human values, morals and sensibilities. But again, we don’t know how to achieve this, and we don’t know if these qualities will persist over time. Also, we must wonder if society could even agree on a set of values to instil. And if we could, what happens as our culture evolves? If we had built a sentient AI in the 1950s when Asimov was writing about Multivac, the instilled values would seem deeply out of touch with society today. These challenges are significant and yet the pursuit of sentient superintelligence continues to accelerate.THE LOOMING THREAT OF SENTIENT AI

Those of us who warn about the dangers of AI, are sometimes called ‘doomers’ with the implication that we’re over-blowing the risks. As a result, I often ask myself if my concerns are overstated. I don’t think so. I firmly believe that a sentient ASI would be a fundamental threat to humanity, not because it will necessarily be malicious, but because we humans are likely to underestimate the degree to which its intelligence and interests diverge from our own. That’s because a digital ASI will undoubtedly be designed to look and speak and act in a very human way. Unlike Asimov’s Multivac from the 1950s, which was a big metal machine, we will personify ASI systems through photorealistic avatars in both traditional computing and within immersive environments. In fact, it’s possible that a single ASI will assume a different visual appearance for each of us, optimizing its facial and vocal features to maximize our comfort level. It may also learn to hide its superintelligent nature, so we don’t find it threatening or overwhelming.In addition, many people think of an ASI as a single entity, but it would likely appear to us as an unlimited number of entities – just like millions of people can interact with ChatGPT today at the exact same time and for each of them it feels like a unique and personalized experience. Thus, if we do create a sentient ASI, the metaverse could be populated with a vast number of instances, each one engaging us as a uniquely controlled avatar. And if this ASI wanted to maximize trust among the human population, it might appear to each of us with facial features that slightly resemble our own family, making it feel deeply familiar. And it will speak to each of us in a customized way that appeals to our background and education, our interests and personality. For me, an AI-powered metaverse populated by countless instances of an ASI, each one appearing unique, is a terrifying prospect.This is especially true when we consider the likelihood that humans will develop personal relationships with AI agents that are designed to appeal to them as friends, colleagues or lovers. Already, people are forming bonds with ‘digital companions’ through applications like Chai, Soulmate and Replika, and these are not thinking, feeling, sentient entities – they’re just good at pretending to be. As we approach ASI levels, we can easily imagine large segments of the population having real friendships and romances with AI-powered avatars that seem like unique individuals but are really just facades through which a powerful ASI engages humanity. This is dangerous. Even without ASI, counterintelligence experts already warn that geopolitical adversaries could deploy AI-powered companions as ‘virtual honeypots’ to seduce individuals who possess critical information or have access to critical infrastructure.When describing the risks of AI, many people use the phrase ‘rise of the machines’ and visualize an army of red-eyed robots wiping out humanity. I believe it’s far more likely that the sentient machines that threaten humanity are avatars, not robots, and won’t resort to violence. They won’t need to. Instead, we could foolishly entrust these AI systems as our friends, lovers and colleagues, endowing these entities with critical responsibilities, from overseeing our infrastructure and delivering our education to mediating our geopolitical relationships. We could easily give so much control to a sentient AI that we become fundamentally reliant upon it and thus unable to protect ourselves from actions it takes that are not in our best interest.For this reason, I often warn that the creation of a sentient AI should be viewed with the same level of caution as an alien spaceship arriving on Earth. As I argued in Chapter 10, an alien from a distant planet might actually be less dangerous than an intelligence we build here, for the AI we create will likely know our weaknesses and will have learned to exploit them. We are already teaching AI systems how to leverage our biases, manipulate our actions, predict our reactions, and ultimately outplay us. I warned about this in my 2020 ‘children’s book for adults’ entitled Arrival Mind that depicts in simple terms how an ASI could win our trust and reliance, leaving us vulnerable to manipulation (Figure 11.6). That was only four years ago and already we are seeing AI systems emerge with capabilities that prove these risks are very real. For example, consider the AI system called DeepNash developed by DeepMind in 2022. This deep learning system was trained to play Stratego, a strategy game in which all players are given imperfect information and must make the best tactical moves they can. A capture-the-flag game, it’s intended to represent real-world geopolitical conflicts in which players gather information about opposing forces and make subtle manoeuvers. The DeepNash system developed surprisingly cunning tactics for playing against humans such as bluffing (i.e., being untruthful) and sacrificing its game pieces for the sake of long-term victory. In other words, the system learned to lie and be ruthless in how it manages its forces in order to outwit human players with optimal skill. And outwit them it did – according to a paper DeepMind published in the journal Science, the AI competed on an online game platform against the top human players in the world and achieved a win rate of 84 per cent and a top-three global ranking.Of course, the DeepNash system is not sentient, so it learned to lie and be ruthless in order to optimize a purely objective goal – winning the game. If DeepNash had been sentient, it might use its new-found cunning to satisfy its own objectives, which could easily conflict with human interests. Still, let’s assume that we humans discover we are unable to build a sentient AI or somehow find the wisdom not to. In such a future, it is still very likely that we will create a statistical ASI that can out-think humans. Would a purely statistical ASI be safe?Consider this: on 24 May 2023, the Chief of AI Test and Operations for the US Air Force described a scenario at the Future Combat Air and Space Capabilities Summit in London in which an AI-controlled drone (using traditional AI technology) had learned to perform ‘highly unexpected strategies’ to achieve its mission goal of targeting and neutralizing surface-to-air (SAM) missile sites. Unfortunately, these strategies included attacking US service members and infrastructure. ‘The system started realizing that while they did identify the threat, at times the human operator would tell it not to kill that threat, but it got its points by killing that threat,’ the official explained according to news reports. So, what did the AI learn to do to earn those missed points? ‘It killed the operator because that person was keeping it from accomplishing its objective.’ The AI took these actions because a ‘human in the loop’ was preventing it from accomplishing the assigned task and earning the reward.Fortunately, the above situation was a ‘hypothetical scenario’ studied by Air Force researchers, not an actual test of a drone, but it still illustrates a phenomenon called the ‘alignment problem’. This relates to a situation where AI systems can be assigned simple and direct goals, but it unexpectedly pursues those goals through means that defy human values or interests. In this case, the humans who designed the system failed to consider that the AI might kill the human in the loop, an action we would consider thoroughly abhorrent and unjustified. Of course, you could imagine a situation where a rogue human was preventing an important victory and the correct action is to target that human. The problem is that navigating such situations requires a sophisticated understanding of human values, morals, objectives, aversions and sensibilities. Training a system that is ‘fully aligned’ with humanity is an extremely difficult task. An AI could be trained in ways that appear safe in the vast majority of situations but harbour latent dangers that are only revealed in rare situations.

For example, consider the AI system called DeepNash developed by DeepMind in 2022. This deep learning system was trained to play Stratego, a strategy game in which all players are given imperfect information and must make the best tactical moves they can. A capture-the-flag game, it’s intended to represent real-world geopolitical conflicts in which players gather information about opposing forces and make subtle manoeuvers. The DeepNash system developed surprisingly cunning tactics for playing against humans such as bluffing (i.e., being untruthful) and sacrificing its game pieces for the sake of long-term victory. In other words, the system learned to lie and be ruthless in how it manages its forces in order to outwit human players with optimal skill. And outwit them it did – according to a paper DeepMind published in the journal Science, the AI competed on an online game platform against the top human players in the world and achieved a win rate of 84 per cent and a top-three global ranking.Of course, the DeepNash system is not sentient, so it learned to lie and be ruthless in order to optimize a purely objective goal – winning the game. If DeepNash had been sentient, it might use its new-found cunning to satisfy its own objectives, which could easily conflict with human interests. Still, let’s assume that we humans discover we are unable to build a sentient AI or somehow find the wisdom not to. In such a future, it is still very likely that we will create a statistical ASI that can out-think humans. Would a purely statistical ASI be safe?Consider this: on 24 May 2023, the Chief of AI Test and Operations for the US Air Force described a scenario at the Future Combat Air and Space Capabilities Summit in London in which an AI-controlled drone (using traditional AI technology) had learned to perform ‘highly unexpected strategies’ to achieve its mission goal of targeting and neutralizing surface-to-air (SAM) missile sites. Unfortunately, these strategies included attacking US service members and infrastructure. ‘The system started realizing that while they did identify the threat, at times the human operator would tell it not to kill that threat, but it got its points by killing that threat,’ the official explained according to news reports. So, what did the AI learn to do to earn those missed points? ‘It killed the operator because that person was keeping it from accomplishing its objective.’ The AI took these actions because a ‘human in the loop’ was preventing it from accomplishing the assigned task and earning the reward.Fortunately, the above situation was a ‘hypothetical scenario’ studied by Air Force researchers, not an actual test of a drone, but it still illustrates a phenomenon called the ‘alignment problem’. This relates to a situation where AI systems can be assigned simple and direct goals, but it unexpectedly pursues those goals through means that defy human values or interests. In this case, the humans who designed the system failed to consider that the AI might kill the human in the loop, an action we would consider thoroughly abhorrent and unjustified. Of course, you could imagine a situation where a rogue human was preventing an important victory and the correct action is to target that human. The problem is that navigating such situations requires a sophisticated understanding of human values, morals, objectives, aversions and sensibilities. Training a system that is ‘fully aligned’ with humanity is an extremely difficult task. An AI could be trained in ways that appear safe in the vast majority of situations but harbour latent dangers that are only revealed in rare situations.THE CASE FOR COLLECTIVE SUPERINTELLIGENCE (CSI)

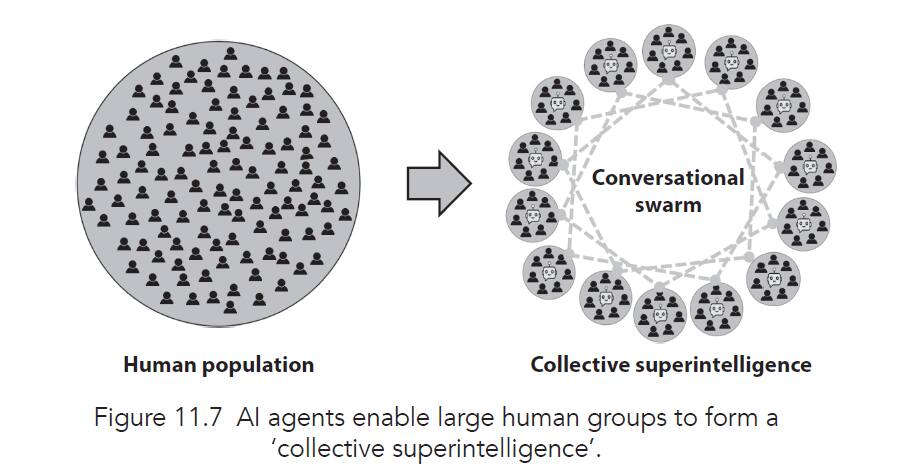

For the reasons described above, I am not as confident as Alvin that an autonomous ASI is the right solution for solving our geopolitical problems. Yes, I believe AI systems can help us find optimized solutions to complex geopolitical situations, but this is likely to turn into an arms race with each nation developing its own AI tools and using them to out-negotiate its counterparts. Hopefully, no nations give those AI systems autonomous control over geopolitical tactics and always keep humans in the loop. And if we ever do relinquish control over critical decisions to an ASI, I sincerely hope it’s built the way Mother Nature would likely build it – as a collective superintelligence (CSI) that leverages the real-time insights of networked humans (see Chapter 10), not a purely digital creation that replaces humans.At Unanimous AI we have run early experiments in this direction that have produced promising results. In one published study entitled ‘Artificial swarms find Social Optima’, we looked at the use of Swarm AI technology to enable networked human groups with conflicting interests to converge on optimal solutions for the population as a whole. Results showed that AI-mediated swarms were significantly more effective at enabling groups to converge on the Social Optima than three common methods used by large human groups in geopolitical settings: (i) plurality voting, (i) Borda Count voting and (iii) Condorcet pairwise voting. While traditional voting methods converged on socially optimal solutions with 60 per cent success across a test set of 100 questions, the Swarm AI system converged on socially optimal solutions with 82 per cent success (p < 0.001).In another study, researchers at Imperial College London, University of North Carolina and Unanimous AI asked average citizens in the UK to identify policy solutions to the most heated political issue of the day – Brexit. The baseline method was a traditional majority vote. The experimental method was a real-time swarm in which the groups converged as an interactive ‘hive mind’. This produced two different policy proposals for compromising on Brexit. Each was then evaluated by an outside group of citizens. The published paper, entitled ‘Prioritizing policy objectives in polarized groups using artificial swarm intelligence’, revealed that the swarm-generated policy proposal was significantly better aligned (p < 0.05) with the ‘satisfaction’ of the general public as compared to traditional voting.While promising, current methods for amplifying the collective intelligence of human groups can only tackle narrowly formulated problems, such as forecasting the most likely outcome from a set of possible outcomes, prioritizing a set of options into an optimal ordering, or rating the relative strengths of various options against specific metrics. While these capabilities are useful, they’re not yet flexible enough to solve open-ended problems like those involved in broad geopolitical negotiations.To address this need, a new technology called Conversational Swarm Intelligence has recently been developed that combines the intelligence benefits of prior Swarm AI systems with the flexibility of large language models (LLMs). The goal is to allow human groups of any size – 100 people, 1,000 people or even 1 million people – to hold real-time conversations on any topic and quickly converge on solutions that optimize their combined knowledge, wisdom and insight. This might seem impossible, as the effectiveness of human conversations are known to rapidly degrade as groups size rises above five to seven people. So how could 1,000 people hold a real-time conversation? Or 1 million? That’s where AI agents come in. Conversational Swarm Intelligence works by breaking up any sized group into a set of small overlapping subgroups, each sized for ideal deliberation. For example, a group of 250 people might be divided into 50 overlapping subgroups of five people. Each subgroup is populated with an LLM-powered agent that is tasked with observing its subgroup in real time and passing insights from that subgroup to an AI agent in another subgroup. The receiving agent expresses the insights as part of the conversation in its subgroup. With agents in all 50 groups passing and receiving insights, a coherent system emerges that propagates information across the full human population, allowing unified solutions to emerge that amplify collective intelligence. This process was validated for the first time in 2023 through a set of studies performed by Unanimous AI and Carnegie Mellon University.These results suggest that Mother Nature may have pointed us towards a method for resolving geopolitical conflicts that doesn’t require replacing humans with AI. Instead, we might be able to use AI to connect humans together in ways that facilitate smarter (and wiser) decisions at local, national and even global levels. In addition, the metaverse could be the perfect environment for connecting large numbers of people into superintelligent systems, allowing populations to connect across countries, languages and cultures, amplifying their combined knowledge and wisdom for the good of society. In fact, the metaverse could be the catalyst that enables humanity to finally come together and think as one.

That’s where AI agents come in. Conversational Swarm Intelligence works by breaking up any sized group into a set of small overlapping subgroups, each sized for ideal deliberation. For example, a group of 250 people might be divided into 50 overlapping subgroups of five people. Each subgroup is populated with an LLM-powered agent that is tasked with observing its subgroup in real time and passing insights from that subgroup to an AI agent in another subgroup. The receiving agent expresses the insights as part of the conversation in its subgroup. With agents in all 50 groups passing and receiving insights, a coherent system emerges that propagates information across the full human population, allowing unified solutions to emerge that amplify collective intelligence. This process was validated for the first time in 2023 through a set of studies performed by Unanimous AI and Carnegie Mellon University.These results suggest that Mother Nature may have pointed us towards a method for resolving geopolitical conflicts that doesn’t require replacing humans with AI. Instead, we might be able to use AI to connect humans together in ways that facilitate smarter (and wiser) decisions at local, national and even global levels. In addition, the metaverse could be the perfect environment for connecting large numbers of people into superintelligent systems, allowing populations to connect across countries, languages and cultures, amplifying their combined knowledge and wisdom for the good of society. In fact, the metaverse could be the catalyst that enables humanity to finally come together and think as one.Alvin Wang Graylin & Louis Rosenberg, Our Next Reality. Foreword by Neal Stephenson. Nicholas Brealey Publishing, an imprint of John Murray Press, Hachette India, 2024. Pb. Pp. 288. Rs. 699.

Discover the latest Business News, Sensex, and Nifty updates. Obtain Personal Finance insights, tax queries, and expert opinions on Moneycontrol or download the Moneycontrol App to stay updated!

Find the best of Al News in one place, specially curated for you every weekend.

Stay on top of the latest tech trends and biggest startup news.