The Department of Telecommunications (DoT) released draft standards recently for assessing the fairness of Artificial Intelligence (AI) solutions in the telecom sector.

"AI is increasingly being used in all domains including telecommunication and related ICT (Information and Communication Technology) for making decisions that may affect our day-to-day lives. Any unintended bias in the AI systems could have grave consequences," DoT said.

The draft standards were released by DoT’s Telecom Engineering Centre (TEC) wing on December 27, 2022.

The standards proposed by DoT provide a three-step process for assessing fairness of AI.

In response to the draft, earlier this week, industry body National Association of Software and Service Companies (NASSCOM) urged TEC to work with the Telecom Regulatory Authority of India to test the standards.

It also proposed that TEC collaborate with other regulators, such as the Reserve Bank of India and the Securities and Exchange Board of India, and start a sandbox security mechanism where entities can test AI-enabled products before commercial rollouts.

This comes at a time when the Indian government is preparing to roll out the Digital India Bill, the much-awaited successor to the more-than-two-decades-old Information Technology Act, in which, it is speculated, the government may introduce sections to ensure algorithmic accountability.

Let's take a look at what these draft standards assessing fairness in AI are, and what the industry has to say about this.

The draft standards document of the Department of Telecommunications divides the sources of bias into three categories: pre-existing biases, which stem from individual or societal biases; technical biases, which arise from inconsistencies in coding and similar issues; and emergent biases, which stem from the feedback loop between a person and computer systems.

A feedback loop is a process in which the outputs of a system are circled back and used as inputs.

What are the factors influencing bias in AI systems?

Biases in AI systems can be traced to the type of data used to train the system, the AI model being deployed, and the kind of infrastructure behind the entire system, the DoT document said.

Who will use the proposed AI fairness standard?

DoT envisages its use by organisations and individuals developing AI systems, third-party auditors, organisations taking part in a tendering process for procurement, sector regulators, and start-ups and Small and Medium Enterprises (SMEs).

How does one evaluate fairness in AI?

Firstly, to evaluate fairness, 'protected attributes', that is, data fields that identify a person by characteristics such as race, nationality and skin colour, have to be identified. "The selection of protected attributes should be based on legal, ethical, and application context and may therefore be chosen wisely," said the draft standards.

"Demographic variants in India are extensive; therefore, one must be careful in identifying protected attributes, especially correlated ones. For example, there is a strong correlation between religion and location in various parts of India," it added.

Secondly, after the protected attributes are selected, groups that experience 'systematic discrimination', designated 'unprivileged groups', have to be identified. The draft standards said that the rest can be identified as 'privileged groups'.

Then, a 'favourable outcome' and 'unfavourable outcome' have to be identified, which would provide an advantage or disadvantage to a recipient. "For example, in a loan prediction case, a favourable outcome corresponds to getting the loan...," the draft reads.

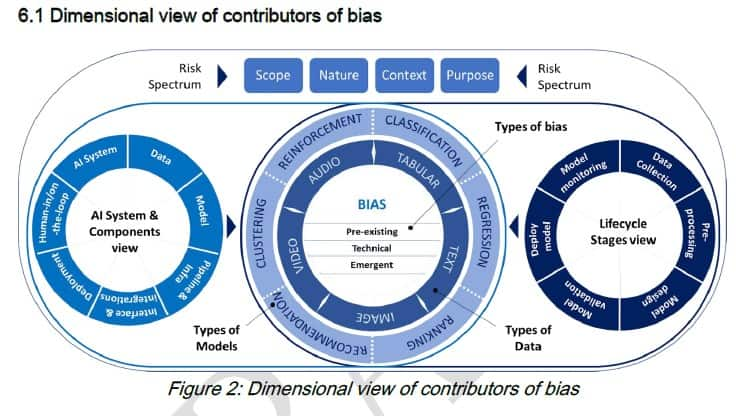
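To make these terms concrete, here is a minimal Python sketch of how the rate of favourable outcomes could be compared between a privileged and an unprivileged group in a loan scenario. The records, the field names (`group`, `approved`) and the choice of metrics (statistical parity difference and disparate impact ratio) are illustrative assumptions; the draft standards may prescribe different metrics.

```python
def favourable_rate(records, group_value, group_key="group", outcome_key="approved"):
    """Share of one group's records that received the favourable outcome."""
    group = [r for r in records if r[group_key] == group_value]
    if not group:
        raise ValueError(f"no records for {group_key}={group_value!r}")
    return sum(1 for r in group if r[outcome_key]) / len(group)


# Hypothetical loan decisions, invented purely for illustration.
records = [
    {"group": "privileged", "approved": True},
    {"group": "privileged", "approved": True},
    {"group": "privileged", "approved": True},
    {"group": "privileged", "approved": False},
    {"group": "unprivileged", "approved": True},
    {"group": "unprivileged", "approved": False},
    {"group": "unprivileged", "approved": False},
    {"group": "unprivileged", "approved": False},
]

priv_rate = favourable_rate(records, "privileged")
unpriv_rate = favourable_rate(records, "unprivileged")

# Difference in favourable-outcome rates; 0 would mean parity.
statistical_parity_difference = unpriv_rate - priv_rate

# Ratio of favourable-outcome rates; 1 would mean parity.
disparate_impact = unpriv_rate / priv_rate

print(f"privileged rate:   {priv_rate:.2f}")
print(f"unprivileged rate: {unpriv_rate:.2f}")
print(f"parity difference: {statistical_parity_difference:.2f}")
print(f"disparate impact:  {disparate_impact:.2f}")
```

In this made-up data the unprivileged group gets the loan far less often than the privileged group, which is the kind of gap a fairness assessment is meant to surface.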

What is the proposed assessment framework for detecting fairness in AI?

The draft standards divide the fairness assessment framework into two parts: a dimensional view of the contributors of bias, and an approach to conducting bias assessment.

Firstly, the dimensional view of contributors of bias defines the types of biases, the types of data, models, lifecycle stages of AI, the risks associated with the bias, and shows how they are interconnected with each other.

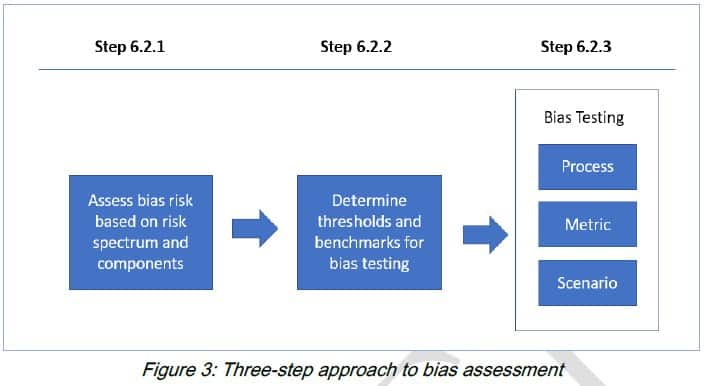

Secondly, DoT defined bias assessment with a three-step approach, which begins with assessing bias risk based on the risk spectrum and components, then determining the threshold and benchmarks for bias testing, and then finally testing for bias with metrics.
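The three steps can be sketched in Python as follows. This is only an illustration of the flow: the risk levels, the questions used to assign them, the threshold values and the metric (a ratio of favourable-outcome rates) are all hypothetical and are not taken from the draft standards.

```python
from dataclasses import dataclass

# Hypothetical thresholds: minimum acceptable ratio of favourable-outcome
# rates (unprivileged / privileged) for each risk level.
THRESHOLDS = {"low": 0.70, "medium": 0.80, "high": 0.90}


@dataclass
class BiasReport:
    risk_level: str
    threshold: float
    metric_value: float
    passed: bool


def assess_risk(affects_public: bool, uses_protected_attributes: bool) -> str:
    """Step 1: place the system on a hypothetical three-level risk spectrum."""
    score = int(affects_public) + int(uses_protected_attributes)
    return ["low", "medium", "high"][score]


def run_assessment(affects_public, uses_protected_attributes,
                   unpriv_rate, priv_rate) -> BiasReport:
    risk = assess_risk(affects_public, uses_protected_attributes)
    threshold = THRESHOLDS[risk]      # Step 2: pick the threshold for that risk
    metric = unpriv_rate / priv_rate  # Step 3: test for bias with a metric
    return BiasReport(risk, threshold, metric, metric >= threshold)


# Hypothetical system: public-facing, uses protected attributes.
report = run_assessment(True, True, unpriv_rate=0.45, priv_rate=0.75)
print(report)
```

A higher-risk system is held to a stricter threshold here, so the same metric value could pass for a low-risk system and fail for a high-risk one.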

What are the components of AI that will go under the scanner during its bias testing?

The draft standards document said that the quality of data has to be evaluated in the context of specific AI system parameters, such as its public impact. Separately, AI models have to be tested independently and a list of adverse incidents relating to AI bias has to be collated.

What happens after the testing?

After the evaluation, the draft standards document said that a report will be generated which would contain details of "assumptions, observations, metric values, risk profiles, types of test performed limitations etc".

How does the industry see the draft standards?

Industry body NASSCOM, in a response to the draft standards earlier this month, said the Telecom Engineering Centre of the DoT should collaborate with TRAI to test the efficacy of the draft standards in the telecom sector.

NASSCOM also suggested that personally identifiable information (PII) used for training an AI model should be obfuscated during bias testing.
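One way such obfuscation could work is sketched below in Python: direct identifiers are replaced with keyed hashes, while the fields needed for bias testing are left untouched. The field names, the sample record and the secret key are hypothetical, and neither NASSCOM nor the draft standards specify this particular technique.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would be stored outside the dataset.
SECRET_KEY = b"replace-with-a-secret-key"

# Hypothetical list of fields treated as direct identifiers.
PII_FIELDS = {"name", "phone"}


def obfuscate(record: dict) -> dict:
    """Replace PII fields with a keyed hash; keep all other fields as they are."""
    masked = {}
    for field, value in record.items():
        if field in PII_FIELDS:
            digest = hmac.new(SECRET_KEY, str(value).encode("utf-8"),
                              hashlib.sha256).hexdigest()
            masked[field] = digest[:16]  # shortened pseudonym
        else:
            masked[field] = value
    return masked


# Invented sample record for illustration only.
record = {"name": "Example Person", "phone": "0000000000",
          "region": "north", "approved": True}
masked = obfuscate(record)
print(masked)
```

Because the same input always maps to the same pseudonym, records belonging to one person can still be linked during testing without exposing who that person is.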

"The draft standards may consider a labeling requirement based on the AI fairness score. This may help to reduce the information asymmetry among end-users," NASSCOM said.
