Google announced a range of new features across its products, including Google Search, Maps, and Shopping, at its annual Search On event on September 28, as the tech giant aims to make its traditional search experience more visual and interactive for users.

"We're going far beyond the search box to create search experiences that work more like our minds, and that are as multidimensional as we are as people," said Prabhakar Raghavan, Senior Vice-President, Google, during the event.

In this new era of search, people will be able to find exactly what they are looking for by combining images, sounds, text and speech, Raghavan added. "We call this making search more natural and intuitive," he said.

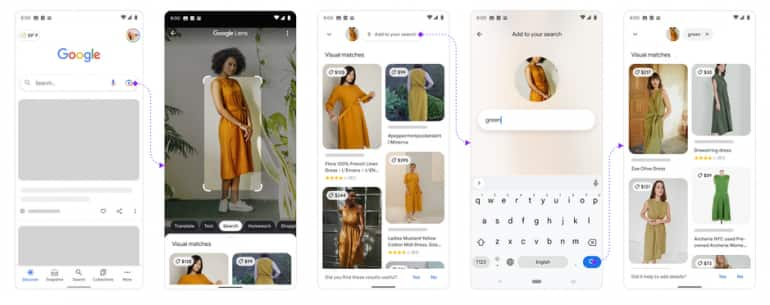

First, Google's multisearch feature, which allows users to search using text and images at the same time, is being expanded to over 70 languages in the coming months. The feature, currently available in English globally, lets people take a picture using Google Lens and add a text query to get information about the object in the picture.

Google's Multi search feature

The company is also rolling out a feature called "multisearch near me" in English in the United States later this year.

The feature, first previewed at Google I/O in May, will let users take a picture or a screenshot of products in categories such as food, apparel and home goods and add a 'near me' text query to browse through nearby local retailers or restaurants that may offer them. The feature will also start surfacing bakeries later this year, Google said.

"The age of visual search is here... a camera is a powerful way to access information and understand the world around you — so much so that your camera is your next keyboard," Raghavan said.

In the coming months, Google will showcase information on what makes a place unique by using machine learning to analyse images and user reviews, helping users preview and evaluate restaurants before they visit. The firm is also expanding its coverage of digital menus and making them more "visually rich and reliable".

These menus will showcase the most popular dishes at a restaurant and call out different dietary options, starting with vegetarian and vegan.

In terms of shopping, one can access a visual feed of products, research tools and nearby inventory related to any specific product. Users can also "shop the look" to help them assemble an outfit. Meanwhile, Google Search will also feature 3D visuals of shoes, starting with sneakers, soon.

Improved Lens translation

During the event, Raghavan previewed an improved Lens translation experience in which translated text is blended into the background image so that it looks and feels more natural to users. The experience is expected to launch later this year.

He said that people already use Google to translate text in images over one billion times a month, across more than 100 languages, enabling them to read menus, storefronts, documents and signs written in other languages.

Google is also changing the way it will display some of its search results to users, by bringing together relevant content from a variety of sources including online creators and a range of formats such as text, images or videos.

In the coming months, Google said, it will surface keyword and topic options to help complete a question as users begin typing a query, and will also provide a visual preview of information. It will also introduce new search experiences to help users explore topics in a more natural fashion.

Besides this, Google's iOS app will start showing shortcuts right under the search bar that surface some of its existing visual search capabilities, such as the ability to shop from screenshots and translate text with the camera.

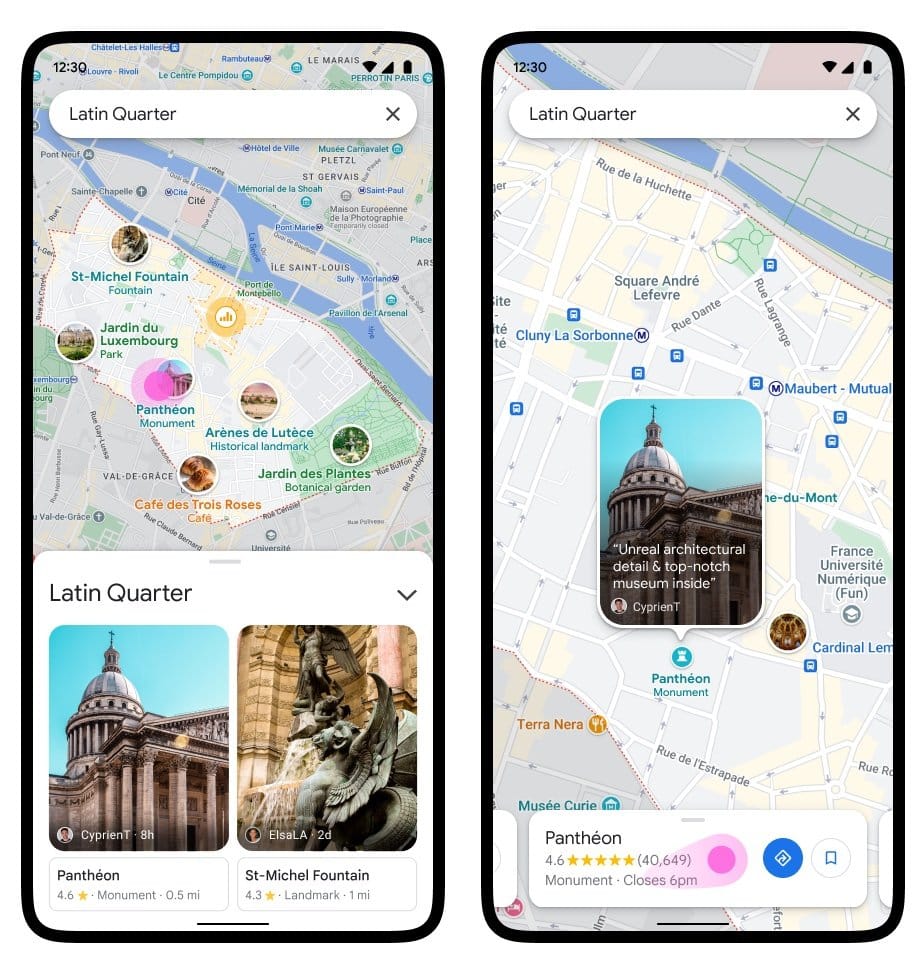

New Google Maps features

A new neighbourhood vibe feature will enable people to select a neighbourhood and see the most popular spots through photos and information from the Google Maps community right on the map. It will be available across the world on Android and iOS in the coming months.

Google Maps' Neighborhood Vibe feature

Google is launching over 250 photorealistic aerial views of global monuments and landmarks, ranging from Tokyo Tower (Tokyo) to the Acropolis (Athens), as part of the immersive view feature that was previewed at the Google I/O event in May.

With this view, one can see what an area looks like at different times of the day, view weather and traffic conditions along with the area's busy spots. The feature will be available in five cities - Los Angeles, London, New York, San Francisco and Tokyo - on Android and iOS in the coming months.

The Live View feature is also getting visual search capabilities that will allow users to explore nearby places faster. The improved experience will be available in six cities -- London, Los Angeles, New York, San Francisco, Paris and Tokyo -- in the coming months on Android and iOS.

Meanwhile, the company's eco-friendly routing feature - currently available in the United States, Canada and Europe - will soon expand to third-party developers through the Google Maps Platform.

This essentially means that firms such as delivery or ridesharing services will have the option to enable eco-friendly routing in their apps and measure fuel consumption and savings for a single trip, multiple trips, or even across their entire fleet to improve performance.

Developers will also have the ability to select an engine type to get the most accurate fuel or energy efficiency estimates when choosing an eco-friendly route, the company said.
