“Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realise the promise of AI for every industry.” With these words, Jensen Huang, founder and CEO of NVIDIA, announced an all-new platform that is set to revolutionise computing. Huang made the announcement at the NVIDIA GTC developer conference in San Jose on Monday, March 18, 2024.

Blackwell is named in honour of David Harold Blackwell, a mathematician who specialised in game theory and statistics. The new architecture succeeds the NVIDIA Hopper architecture, launched two years ago. NVIDIA has already become the preferred supplier for tech companies aiming to ride the AI boom, and this announcement is likely to strengthen the company’s position further.

According to NVIDIA, Blackwell is set to fuel accelerated computing and generative AI. The company says Blackwell’s six new technologies together enable AI training and real-time LLM inference for models scaling up to 10 trillion parameters.

NVIDIA hasn’t revealed pricing but confirmed that Blackwell-based products will be available from partners starting later this year. AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to offer Blackwell-powered instances, as will NVIDIA Cloud Partner program companies Applied Digital, CoreWeave, Crusoe, IBM Cloud and Lambda. It’s part of the company’s blueprint to become more of a platform provider, like Microsoft or Apple, on which other companies can build software. Jensen Huang summed up this vision: “Blackwell’s not a chip, it’s the name of a platform.”

The new Blackwell platform promises significantly better AI computing performance and energy efficiency. According to the company, the new Blackwell chips are between seven and 30 times faster than the H100 while using up to 25 times less energy. This leap was made possible by the integration of 208 billion transistors, a massive increase over the H100’s 80 billion. NVIDIA achieved this by interconnecting two large chip dies that communicate at speeds of up to 10 terabytes per second.

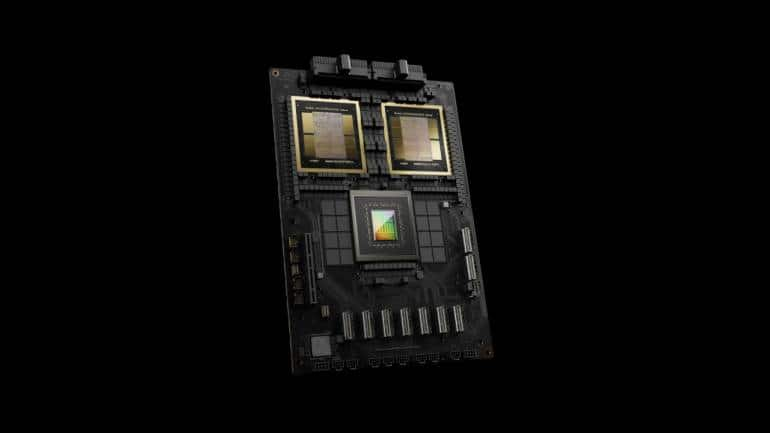

NVIDIA GB200 Grace Blackwell Superchip.

The Blackwell platform has already received high-profile endorsements, with everyone from Satya Nadella to Sundar Pichai to Mark Zuckerberg acknowledging it as a huge step forward. Sam Altman, CEO of OpenAI, also weighed in: “Blackwell offers massive performance leaps, and will accelerate our ability to deliver leading-edge models. We’re excited to continue working with NVIDIA to enhance AI compute.” The new chip is set to spark a fresh scramble among tech companies, much like the rush and waiting times that accompanied NVIDIA’s H100 AI GPUs.
