In Pics: Meet GPT-4, the most advanced AI language model yet

GPT-4 is a groundbreaking development in AI language models, promising to transform the way humans communicate and interact with machines.

GPT-4 features advanced capabilities and cutting-edge technology that will push the boundaries of what is possible with AI.

OpenAI has been releasing GPT language models since 2018. GPT-3, the third such release, arrived in 2020, and ChatGPT, the company's chatbot product released in 2022, was built on GPT-3.5, an improved version of that model.

The new system is a “multimodal” model, which means it can accept images as well as text as inputs, allowing users to ask questions about pictures.

GPT-4 is not just a language model; it can also work with visual input.

GPT-4 can handle much longer text than its predecessors, accepting and producing up to about 25,000 words compared with roughly 3,000 words for ChatGPT.

According to OpenAI, the system can also answer queries based on the content of an image.

When asked to explain why an image of a squirrel with a camera was funny, GPT-4 replied: “We don’t expect them to use a camera or act like a human.”

When given a photo of a rudimentary hand-drawn sketch of a website, GPT-4 created a working website based on the drawing.

The new model is trained to refuse inappropriate requests.

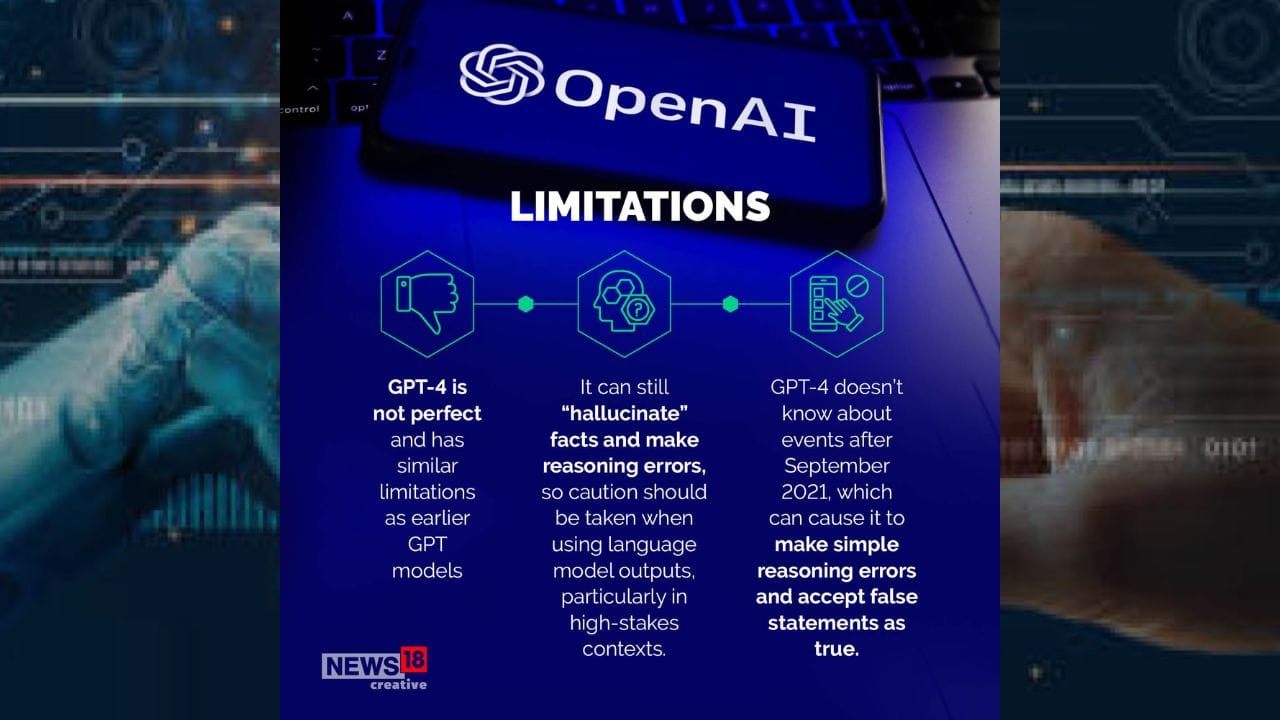

GPT-4 generally lacks knowledge of events after September 2021, when most of its training data ends. It can also make simple reasoning errors and accept false statements as true.

Discover the latest Business News, Budget 2025 News, Sensex, and Nifty updates. Obtain Personal Finance insights, tax queries, and expert opinions on Moneycontrol or download the Moneycontrol App to stay updated!